mmWave sensing: Millimeter wave (mmWave) networks, because of their higher operating frequency and directional design, offer unprecedented sensing capabilities. In our research, we have shown that they can enable highly accurate ambient sensing, comparable to vision in many cases, which opens up a range of new applications. Using mmWave sensors, we developed a home assistant for deaf and hard-of-hearing (DHH) people that can not only recognize hand signs but also capture whole-body motion. Additionally, because of the larger available bandwidth, mmWave sensors operating as FMCW radars can detect minute displacements. In our research, we show that this capability can be exploited to eavesdrop on conversations from outside the area where they take place.
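To give a sense of why a mmWave FMCW radar is sensitive to such small movements, the sketch below uses the standard radar relation between a phase change across chirps and radial displacement, Δd = λ·Δφ / (4π). It is an illustration only, not the pipeline used in our work; the 77 GHz carrier and the function name `displacement_from_phase` are assumptions chosen for the example.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def displacement_from_phase(delta_phi_rad, carrier_hz):
    """Radial displacement (m) implied by a phase change between chirps.

    Uses delta_d = wavelength * delta_phi / (4 * pi); the factor 4*pi
    (rather than 2*pi) accounts for the round trip to the target and back.
    """
    wavelength = C / carrier_hz
    return wavelength * delta_phi_rad / (4 * math.pi)


# Assumed carrier frequency for illustration: 77 GHz (wavelength of about 3.9 mm).
one_degree = math.pi / 180
d = displacement_from_phase(one_degree, 77e9)
print(f"1 degree of phase corresponds to {d * 1e6:.2f} micrometres")
```

At this assumed carrier, one degree of phase corresponds to about 5.4 micrometres of motion, which is why surface vibrations caused by sound can show up in the radar phase.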

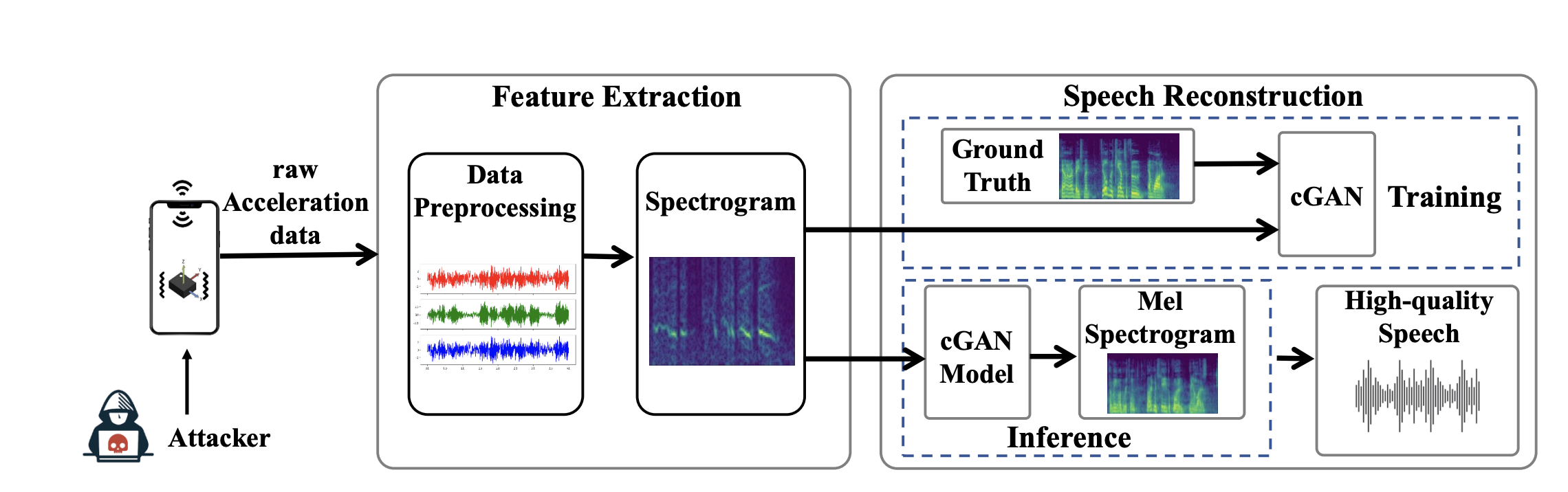

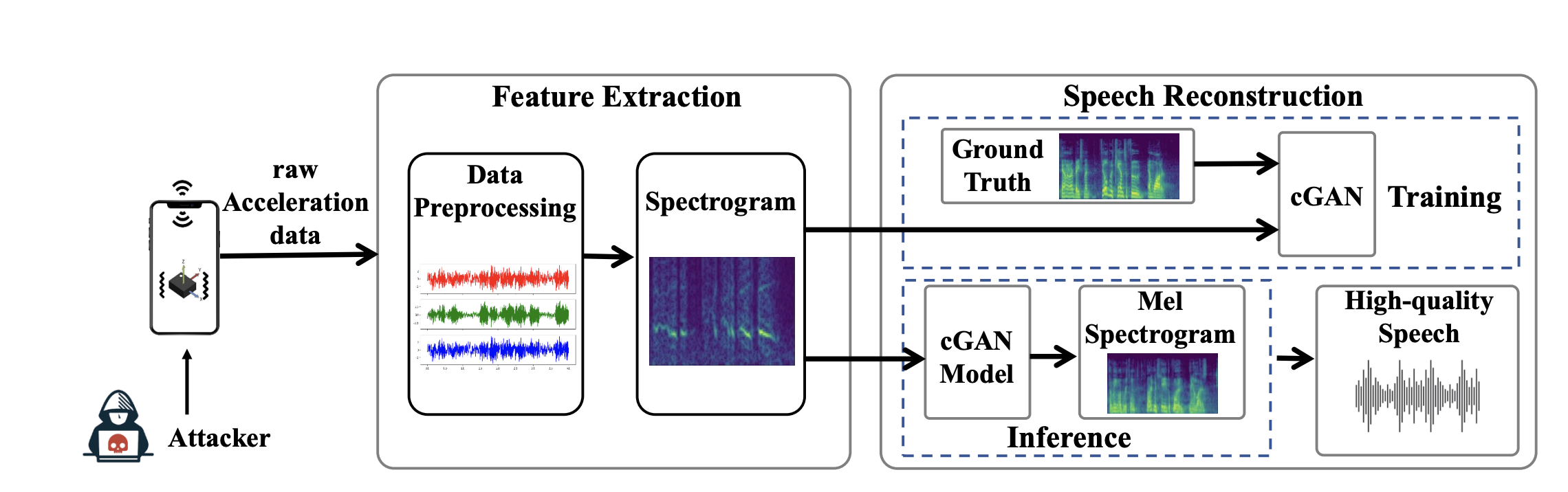

Wearable/IMU sensing: Inertial measurement units (IMUs) can enable ubiquitous sensing because of their presence in smartphones, smartwatches, and earbuds. Our research team showed that, by exploiting this ubiquity, it is possible to eavesdrop on conversations using the accelerometer data measured by the IMUs. To do so, our team proposed a generative model that takes as input the noisy spectrogram obtained from the accelerometer data and enhances it to recover the clear underlying audio. Building on the same ubiquity, our team is now investigating the use of wearables to build accessible solutions for DHH people.
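As a rough illustration of the input side of this pipeline, the sketch below turns a one-axis accelerometer trace into a magnitude spectrogram with a short-time Fourier transform. The generative enhancement model itself is not shown. The 500 Hz sampling rate, the frame sizes, the synthetic signal, and the helper name `magnitude_spectrogram` are all assumptions made for the example, not details of our system.

```python
import numpy as np


def magnitude_spectrogram(signal, frame_len=128, hop=32):
    """Short-time Fourier magnitude of a 1-D signal.

    Returns an array of shape (frame_len // 2 + 1, n_frames).
    """
    signal = np.asarray(signal, dtype=float)
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack(
        [signal[i * hop : i * hop + frame_len] * window for i in range(n_frames)]
    )
    return np.abs(np.fft.rfft(frames, axis=1)).T


# Synthetic stand-in for accelerometer data: a 100 Hz vibration plus noise,
# sampled at an assumed 500 Hz for two seconds.
fs = 500
t = np.arange(2 * fs) / fs
rng = np.random.default_rng(0)
accel_z = np.sin(2 * np.pi * 100 * t) + 0.5 * rng.standard_normal(t.size)

spec = magnitude_spectrogram(accel_z)
print(spec.shape)  # (frequency bins, time frames)
```

A noisy spectrogram of this kind is what an enhancement model would receive as input; the low sampling rate of typical IMUs limits the recoverable frequency range, which is part of what makes the recovery problem hard.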

Publications:

IEEE S&P '22

Sensing for mobile systems:

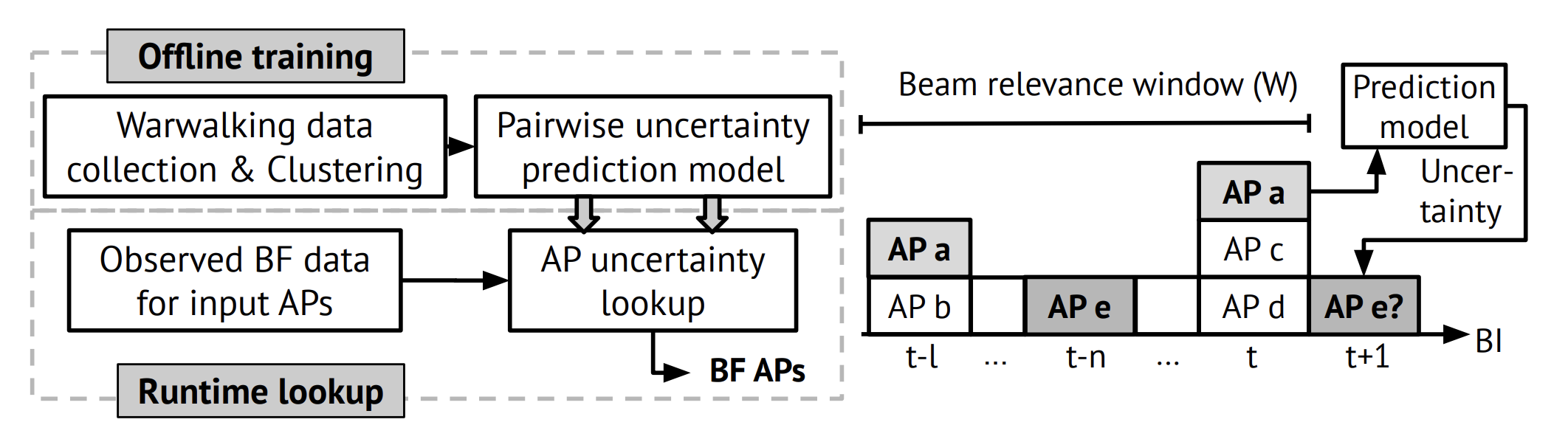

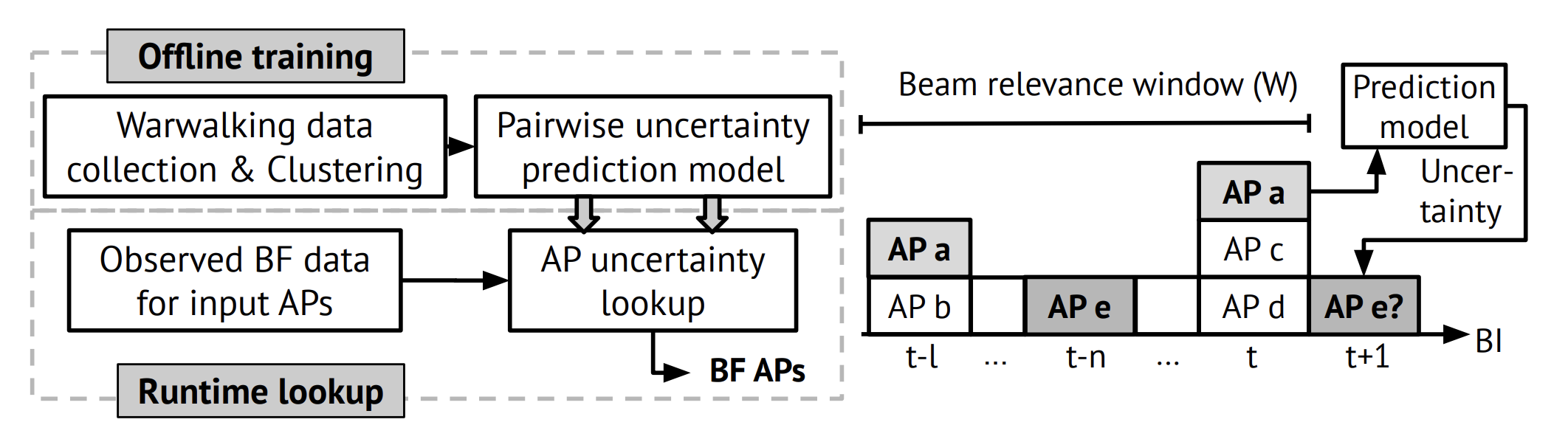

Because mmWave links are directional and susceptible to blockage, supporting user mobility and beam tracking is a challenging problem. Additionally, dense deployments of access points are recommended in order to provide better coverage. In such dense deployments, beam tracking can be computationally expensive and extremely challenging. In our research, we showed that the beamforming overhead can be reduced by exploiting the correlation in beamforming between colocated access points.
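The intuition behind this saving can be shown with a toy example. The sketch below is not our algorithm: it assumes two colocated access points share an aligned beam codebook, so that the best beam index found by one is a good starting guess for the other. The gain profile, the window size, and the function names are hypothetical.

```python
import numpy as np


def exhaustive_search(gains):
    """Probe every beam in the codebook; returns (best_index, probes_used)."""
    return int(np.argmax(gains)), len(gains)


def neighbour_guided_search(gains, neighbour_best, window=2):
    """Probe only beams within `window` indices of a neighbour AP's best beam."""
    n = len(gains)
    candidates = [(neighbour_best + k) % n for k in range(-window, window + 1)]
    best = max(candidates, key=lambda i: gains[i])
    return int(best), len(candidates)


# Toy codebook of 64 beams whose gain peaks at beam 20 for this access point.
beams = np.arange(64)
gains = np.exp(-0.5 * ((beams - 20) / 3.0) ** 2)

full = exhaustive_search(gains)
# Assume the colocated neighbour reported beam 21 as its own best beam.
guided = neighbour_guided_search(gains, neighbour_best=21)
print(full, guided)
```

In this toy setting both searches select beam 20, but the guided search probes 5 beams instead of 64. The saving depends entirely on how strongly the two access points' best beams are correlated; if the neighbour's hint is far off, a fallback to a wider search would be needed.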

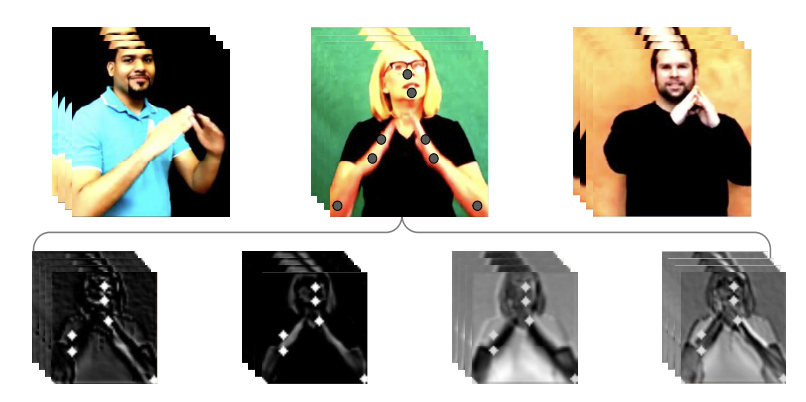

Automatic ASL recognition:

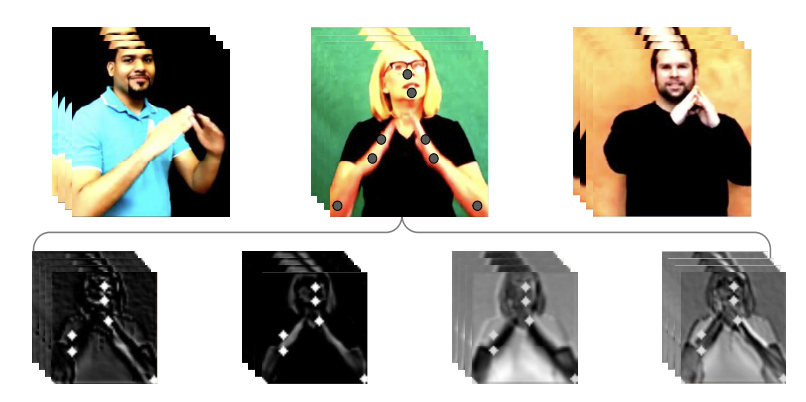

American Sign Language (ASL) is a visual mode of communication used by Deaf and hard-of-hearing (DHH) people. Building automatic ASL recognition systems could bridge the gap between ASL users and non-ASL users and also extend existing voice-based technologies to DHH people. Our research team has developed multiple computer vision solutions that incorporate ASL domain-specific knowledge to improve camera-based automatic ASL recognition performance.